After exploring various aspect of programming, it's important to understand and calculate the amount of time our code takes to execute for a given size of input. The concept needed for this is called Time Complexity. In this article we will be exploring various aspects of Time Complexity and also solve numerous questions on it, so that we can have strong foundation of one of the most important aspect in the world of DSA.

Introduction

For a beginner the first question that should come to his/her mind is, "what is time complexity?". The answer is pretty simple, it is a measure of the amount of time an algorithm

takes to complete as a function of the size of the input. It is important to analyze the time complexity of an

algorithm because it helps us to compare different solutions and choose the

most efficient one for a given problem.

In simple words (just to understand), one way to measure the time complexity of an algorithm is to

count the number of iterations it performs. We can express the time complexity

of an algorithm as a function of n, where n is the input size...

Time complexity is commonly represented using big O

notation, which describes the upper bound of the growth rate of an algorithm's

running time.

For

example, consider the following algorithm that prints all elements of an array.

int print(int arr[], int n)

{

for(int i=0; i<n;i++)

System.out.println(arr[i]);

}

The

time complexity of this algorithm is O(n), where n is the length of the

array. This means that the algorithm performs n operations/iterations.

Classes of Time Complexity

Analyzing the time complexity of algorithms helps us

understand how well an algorithm will perform and scale as the size of the

input grows. This can help us choose the most efficient algorithm for a given

task. There

are different classes of time complexity that are commonly used to classify

algorithms, such as constant, logarithmic, linear, polynomial, exponential,

etc. Here, an algorithm with constant time complexity always takes

the same amount of time regardless of the input size, while an algorithm with

exponential time complexity takes exponentially more time as the input size

increases. To understand different classes time complexity let's take example on them.

Constant Time Complexity O(1):

int findfirst(int a[]){

return a[0];

}

The function takes constant time O(1) because it returns the element of the input array.

Linear Time Complexity O(n):

int findmax(int nums[])

{

int max=Integer.MIN_VALUE;

for(int i=0;i<n;i++)

{

if(max<a[i])

{

max=a[i];

}

return max;

}

The first line inside function takes constant time O(1). The for loop takes O(n) time when size of the input is n. The if statement and max assignment operation take constant time O(1). The return statement also takes constant time O(1). Therefore the time complexity of the algorithm is O(n).

Quadratic time complexity O(n^2):

void bubbleSort(int[] numbers) {

int n =

numbers.length;

for (int i = 0; i

< n - 1; i++) {

for (int j =

0; j < n - i - 1; j++) {

if

(numbers[j] > numbers[j + 1]) {

int

temp = numbers[j];

numbers[j] = numbers[j + 1];

numbers[j + 1] = temp;

}

}

}

}

The first line takes constant time O(1). The outer for loop takes O(n) time where n is the length

of the input array. The inner for loop takes O(n) time. The if statement and swap operation take constant time

O(1). Therefore the overall time complexity of the bubbleSort

function is O(n^2).

Logarithmic time complexity O(log n):

public static int binarySearch(int[] numbers, int target) {

int left = 0;

int right =

numbers.length - 1;

while (left <=

right) {

int mid = left

+ (right - left) / 2;

if

(numbers[mid] == target) {

return

mid;

} else if

(numbers[mid] < target) {

left = mid

+ 1;

} else {

right =

mid - 1;

}

}

return -1;

}

The first two lines take constant time O(1). The while loop takes O(log n) time where n is the length

of the input array. All other operations inside the loop take constant time

O(1). Therefore, the overall time complexity of the

binarySearch function is O(log n).

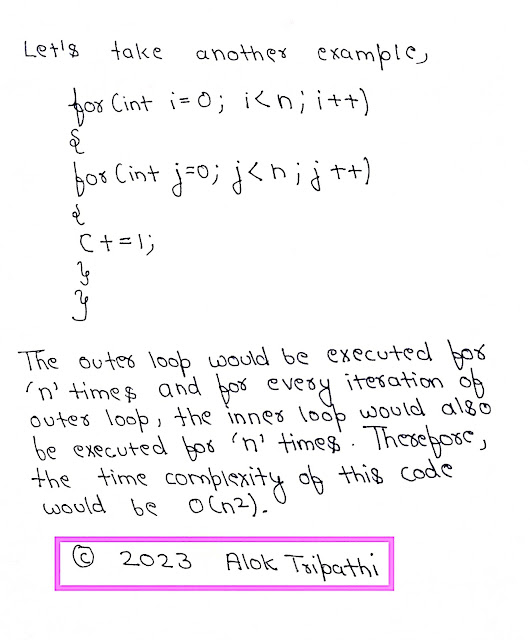

Let's take another example:

Linearithmic

time complexity O(n log n):

Practice Problems:

To strengthen our knowledge further in time complexity, let's try several problems:

Hope you liked this explanation, for any doubt/feedback/improvements/corrections you can comment down in the comment section.